Building Resilience Against Misinformation in a Monumental Election Year

At the core of our hyperconnected reality, where the digital and physical worlds blur into one another, the need for – and oftentimes absence of – genuine human connection is unavoidably stark. At our most connected, we are also more lonely and isolated than ever.

There’s an obvious paradox here: While we’re intricately linked, sharing everything from our most intimate life events to our professional milestones, our capacity to forge genuine human connections appears diminished. The essence of trust in one another seems to be slipping through the digital cracks. A report suggests that distrust has become society’s default emotion.

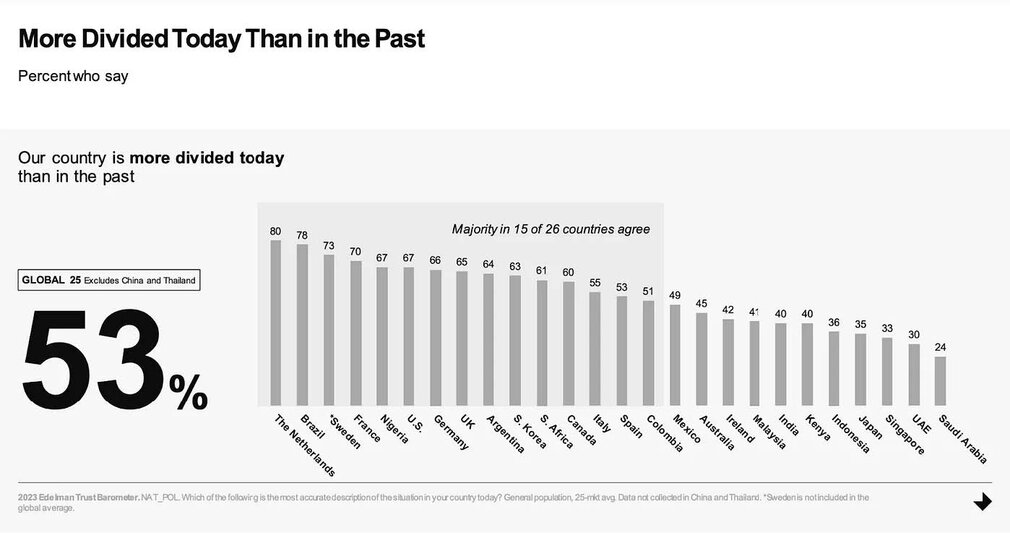

When distrust is the default, we lack the ability to debate or collaborate. When trust is absent, division thrives.

By now we know that social media, which holds the power to build bridges, can facilitate, accelerate, and further deepen this very chasm. On the darker side of the internet, we are asked to navigate anything from fraud, abuse, and vicariously traumatic content to sophisticated and coordinated disinformation campaigns designed to have us question electoral processes and democracy.

Perhaps it’s time to move beyond the notion that misinformation achieves its goal solely when individuals or groups adopt a specific (false) belief. Instead, we might consider that the real damage is done when people find themselves in a state of confusion, unable to discern what to believe at all. The emergence of AI-generated content intensifies this effect, making us question the trustworthiness of all online information. This is when misinformation thrives.

Research suggests that false and unverified information can travel faster and further than any other information online and that, once it is out, it tends to stick. To make matters more complicated, research also suggests that reactive efforts to undo and correct misinformation risk making the content appear more credible and truthful simply by repeating it (something psychologists refer to as the ‘illusory truth effect’ and the ‘continued influence effect’).

This raises an important question: Instead of attempting to keep up with, if not outpace, misinformation, how can we build psychological resilience that prevents its spread from the outset?

Over the past seven years, my colleagues at the University of Cambridge, alongside partners including the UK Cabinet Office and Google, have developed an intervention based on inoculation theory. By prebunking – exposing individuals to weakened forms of misinformation – we foster 'mental antibodies' that enhance the ability to identify and resist manipulative content.

This proactive strategy is crucial in an election year impacting over half the world's population, where the integrity of democracies is at stake.

Our promising results demonstrate that by empowering individuals to recognize and counter misinformation, we can protect societies from its corrosive effects and strengthen democratic resilience. I am particularly excited to have contributed to the largest prebunking campaign to date: an EU-wide initiative that will launch this May, ahead of the parliamentary election.

By applying our research in this real-world context, we aim to demonstrate the tangible benefits of our work and significantly contribute to the preservation of electoral integrity across Europe.

Forbes 30 Under 30 Honoree

Dr. Basol is the CEO & Founder of Pulse, an innovation lab that crafts human-centred and evidence-based strategies to ensure resilient and responsible technological innovation across society. She is also the co-developer of Go Viral!, an award-winning inoculation game designed to combat COVID-19 conspiracies and vaccine hesitancy.

OVE Presse
